One of my favorite things to come out of Software-Defined Networking (SDN) is the concept of easily programmable stateful firewalls, which AWS calls Security Groups. I think the name could have been better chosen, since it is both generic and overloaded in this industry. I doubt there is any hope of changing it at this point, though. The name has stuck around since the introduction of the VPC in 2009, and we're bound to confuse it with Active Directory security groups in conversation at least once a year for the rest of our lives.

Because anything inside a VPC must have a Security Group assigned to it, we have a great opportunity to move away from defining our rules with CIDRs, at least in many cases, and instead define them using references to other Security Groups. This often raises the question: how many Security Groups should you use within your system or application?

The two extreme ends of the spectrum are:

- Use one Security Group that is shared by all resources

- Every resource uses its own Security Group
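To make the difference between a CIDR-based rule and a Security Group reference concrete, here is a minimal Python sketch that builds the `IpPermissions` entries that boto3's `authorize_security_group_ingress` and `authorize_security_group_egress` accept. The helper names and every group ID are hypothetical; only the dictionary keys come from the EC2 API.

```python
def tcp_rule_from_cidr(port: int, cidr: str, description: str) -> dict:
    """IpPermissions entry for a single TCP port, scoped to a CIDR block."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr, "Description": description}],
    }


def tcp_rule_from_group(port: int, group_id: str, description: str) -> dict:
    """IpPermissions entry for a single TCP port, scoped to another Security Group.

    Matches any network interface that has `group_id` attached, regardless of its IP.
    """
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "UserIdGroupPairs": [{"GroupId": group_id, "Description": description}],
    }


if __name__ == "__main__":
    # Hypothetical IDs for illustration only.
    by_cidr = tcp_rule_from_cidr(5432, "10.0.0.0/16", "anything in the VPC")
    by_reference = tcp_rule_from_group(5432, "sg-0lambda000example", "only the API Lambdas")
    print(by_cidr)
    print(by_reference)
    # With credentials configured, the reference-based rule could be applied with:
    #   import boto3
    #   boto3.client("ec2").authorize_security_group_ingress(
    #       GroupId="sg-0database00example", IpPermissions=[by_reference])
```

The CIDR rule admits anything that happens to hold an address in the range; the reference admits only resources that carry the referenced group, which is what makes the "how many groups" question matter.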

Example Scenario

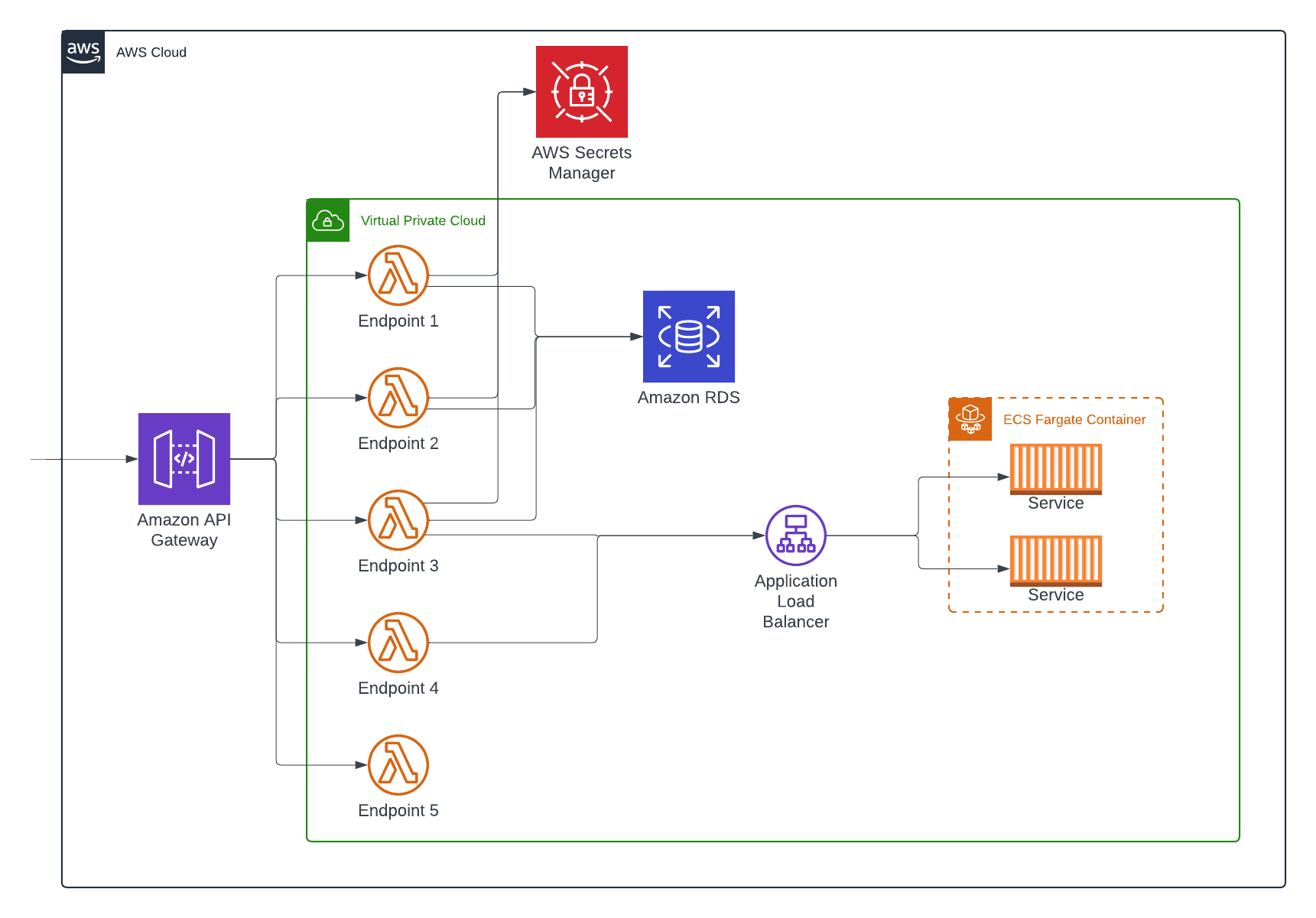

Our example will be an API. This could be publicly facing or internal facing, whichever you prefer, but an API nonetheless.

I've left a lot of details out of this diagram to keep the focus on the components, but it is an API exposing 5 endpoints built with API Gateway and Lambda. It is backed by a relational database on RDS (we'll say Postgres so we can identify a port) and some other service hosted in ECS that is fronted by an Application Load Balancer (ALB). We'll also assume that Secrets Manager is reached over the internet, so there is an Internet Gateway and a NAT somewhere in our network that aren't shown on the diagram.

- Two of the endpoints talk to the database using password-based authentication, with the credentials residing in Secrets Manager.

- One of the endpoints talks to the service hosted on ECS through the ALB.

- One of the endpoints combines both of the above access patterns, talking to both the database and the ECS service.

- One endpoint is self-contained and does not talk to anything.

Using a Single Security Group

If we start at the first end of the spectrum we create a single Security Group and assign it to every resource in our VPC. This means that every Lambda, the database, the ALB, and the ECS services will all share the same Security Group.

And we can do that! It would be completely functional. What's more, we can still use references (albeit self-references). We would end up with a Security Group configured like so:

| Rule # | Port | From |
|---|---|---|
| 1 | 5432 | self |
| 2 | 443 | self |

| Rule # | Port | To |
|---|---|---|
| 1 | 443 | 0.0.0.0/0 |
| 2 | 5432 | self |

The first thing to notice here is that talking to Secrets Manager over the internet really hurts our ability to be granular and use references in our rules. Since we need an egress rule over port 443 to the internet (0.0.0.0/0), any egress rule tying the Security Group to itself over 443, for Lambda talking to the ALB or the ALB talking to ECS, would be superseded by it.

This has nothing to do with the "one vs. many" Security Group question, but it's worth noting overall. We'll remedy it a bit later.
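A small Python model shows why the self-reference adds nothing on egress once 0.0.0.0/0 is allowed on the same port. This is a simplified sketch, not how AWS evaluates rules internally: rules are allow-only `(port, peer)` pairs, a peer is either a Security Group ID or a CIDR, and `sg-shared` plus all addresses are hypothetical (the public IPs come from the documentation ranges).

```python
import ipaddress

# Egress rules as (port, peer) pairs; a peer is a Security Group ID or a CIDR.
WITH_SELF_REFERENCE = [(443, "0.0.0.0/0"), (443, "sg-shared"), (5432, "sg-shared")]
WITHOUT_SELF_REFERENCE = [(443, "0.0.0.0/0"), (5432, "sg-shared")]


def egress_allows(rules, port, dst_ip, dst_groups):
    """Return True if any egress rule permits TCP traffic to dst_ip:port."""
    for rule_port, peer in rules:
        if rule_port != port:
            continue
        if peer.startswith("sg-"):
            # Group reference: the destination must carry that group.
            if peer in dst_groups:
                return True
        elif ipaddress.ip_address(dst_ip) in ipaddress.ip_network(peer):
            # CIDR: the destination address must fall inside the block.
            return True
    return False


# Hypothetical destinations: the ALB inside the VPC, a stand-in address for
# Secrets Manager, and an arbitrary host on the internet.
destinations = [
    ("alb", "10.0.1.10", {"sg-shared"}),
    ("secrets-manager", "203.0.113.10", set()),
    ("arbitrary-host", "198.51.100.7", set()),
]

for name, ip, groups in destinations:
    with_ref = egress_allows(WITH_SELF_REFERENCE, 443, ip, groups)
    without_ref = egress_allows(WITHOUT_SELF_REFERENCE, 443, ip, groups)
    print(f"{name}: with self-reference={with_ref}, without={without_ref}")
```

Every destination is reachable over 443 either way, including the arbitrary host, which is exactly the loss of granularity described above.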

The major downside of this approach is that it's simply too open. The endpoint that only talks to ECS has the ability to talk to the database. The endpoints that only talk to the database have the ability to talk to ECS. The endpoint that needs no external resources can talk to both! We end up in a scenario where we're close to having an open network.
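To see how open the shared group is, here is a minimal reachability sketch in Python. It assumes a simplified stateful model (a new connection needs a matching egress rule on the source and a matching ingress rule on the destination), treats 0.0.0.0/0 as matching anything, and uses hypothetical resource names for the five endpoint Lambdas; "self" from the tables becomes the group's own ID.

```python
from typing import NamedTuple

ANYWHERE = "0.0.0.0/0"


class SecurityGroup(NamedTuple):
    group_id: str
    ingress: tuple = ()  # (port, peer) pairs; peer is a group ID or ANYWHERE
    egress: tuple = ()


def rule_matches(rules, port, peer_group_ids):
    """True if a rule covers `port` and its peer is ANYWHERE or one of the peer's groups."""
    return any(p == port and (peer == ANYWHERE or peer in peer_group_ids) for p, peer in rules)


def can_connect(src_groups, dst_groups, port):
    """A new connection needs egress on the source AND ingress on the destination."""
    src_ids = {g.group_id for g in src_groups}
    dst_ids = {g.group_id for g in dst_groups}
    egress_ok = any(rule_matches(g.egress, port, dst_ids) for g in src_groups)
    ingress_ok = any(rule_matches(g.ingress, port, src_ids) for g in dst_groups)
    return egress_ok and ingress_ok


shared = SecurityGroup(
    "sg-shared",
    ingress=((5432, "sg-shared"), (443, "sg-shared")),
    egress=((443, ANYWHERE), (5432, "sg-shared")),
)

lambdas = ["lambda-db-1", "lambda-db-2", "lambda-ecs", "lambda-both", "lambda-none"]
attached = {name: [shared] for name in lambdas + ["rds", "alb", "ecs"]}

for name in lambdas:
    reachable = [
        target
        for target, port in (("rds", 5432), ("alb", 443))
        if can_connect(attached[name], attached[target], port)
    ]
    print(name, "->", reachable)
```

Every Lambda, including the self-contained one, can reach both the database and the ALB.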

Every Resource Has Its Own Security Group

Shifting to the other side of the spectrum, we would have a 1:1 mapping between resource and Security Group. I won't bother showing tables for these, since we'd end up with 8 pairs of them (one per Lambda, plus the database, the ALB, and the ECS service) and none of us would bother reading them in a blog post. This approach improves our security posture because it gives us the greatest amount of granularity.

Even the fact that we're talking to Secrets Manager over the internet, where we can't use a Security Group reference, is better managed. Sure, the Security Groups for the three endpoints that use the database still need to allow egress over 443 to 0.0.0.0/0, but we have an additional layer in place because the ALB will only allow ingress traffic from the endpoints that need it.
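The same simplified reachability model, now with one hypothetical group per resource (before the VPC endpoint exists), shows the extra layer the ALB provides: the database Lambdas still have 443 open to 0.0.0.0/0, but the ALB's ingress only names the two groups that need it. Group IDs and the ALB-to-ECS port are assumptions for illustration.

```python
from typing import NamedTuple

ANYWHERE = "0.0.0.0/0"


class SecurityGroup(NamedTuple):
    group_id: str
    ingress: tuple = ()  # (port, peer) pairs; peer is a group ID or ANYWHERE
    egress: tuple = ()


def rule_matches(rules, port, peer_group_ids):
    return any(p == port and (peer == ANYWHERE or peer in peer_group_ids) for p, peer in rules)


def can_connect(src_groups, dst_groups, port):
    """A new connection needs egress on the source AND ingress on the destination."""
    src_ids = {g.group_id for g in src_groups}
    dst_ids = {g.group_id for g in dst_groups}
    egress_ok = any(rule_matches(g.egress, port, dst_ids) for g in src_groups)
    ingress_ok = any(rule_matches(g.ingress, port, src_ids) for g in dst_groups)
    return egress_ok and ingress_ok


groups = {
    # 443 to ANYWHERE is still needed to reach Secrets Manager over the internet.
    "lambda-db-1": SecurityGroup("sg-lambda-db-1", egress=((5432, "sg-rds"), (443, ANYWHERE))),
    "lambda-db-2": SecurityGroup("sg-lambda-db-2", egress=((5432, "sg-rds"), (443, ANYWHERE))),
    "lambda-ecs": SecurityGroup("sg-lambda-ecs", egress=((443, "sg-alb"),)),
    "lambda-both": SecurityGroup("sg-lambda-both", egress=((5432, "sg-rds"), (443, ANYWHERE))),
    # No rules. A newly created Security Group starts with an allow-all
    # outbound rule, which would have to be removed for this to hold.
    "lambda-none": SecurityGroup("sg-lambda-none"),
    "rds": SecurityGroup(
        "sg-rds",
        ingress=((5432, "sg-lambda-db-1"), (5432, "sg-lambda-db-2"), (5432, "sg-lambda-both")),
    ),
    "alb": SecurityGroup(
        "sg-alb",
        ingress=((443, "sg-lambda-ecs"), (443, "sg-lambda-both")),
        egress=((443, "sg-ecs"),),
    ),
    "ecs": SecurityGroup("sg-ecs", ingress=((443, "sg-alb"),)),
}

for src in ["lambda-db-1", "lambda-db-2", "lambda-ecs", "lambda-both", "lambda-none"]:
    allowed = [
        dst
        for dst, port in (("rds", 5432), ("alb", 443))
        if can_connect([groups[src]], [groups[dst]], port)
    ]
    print(src, "->", allowed)
```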

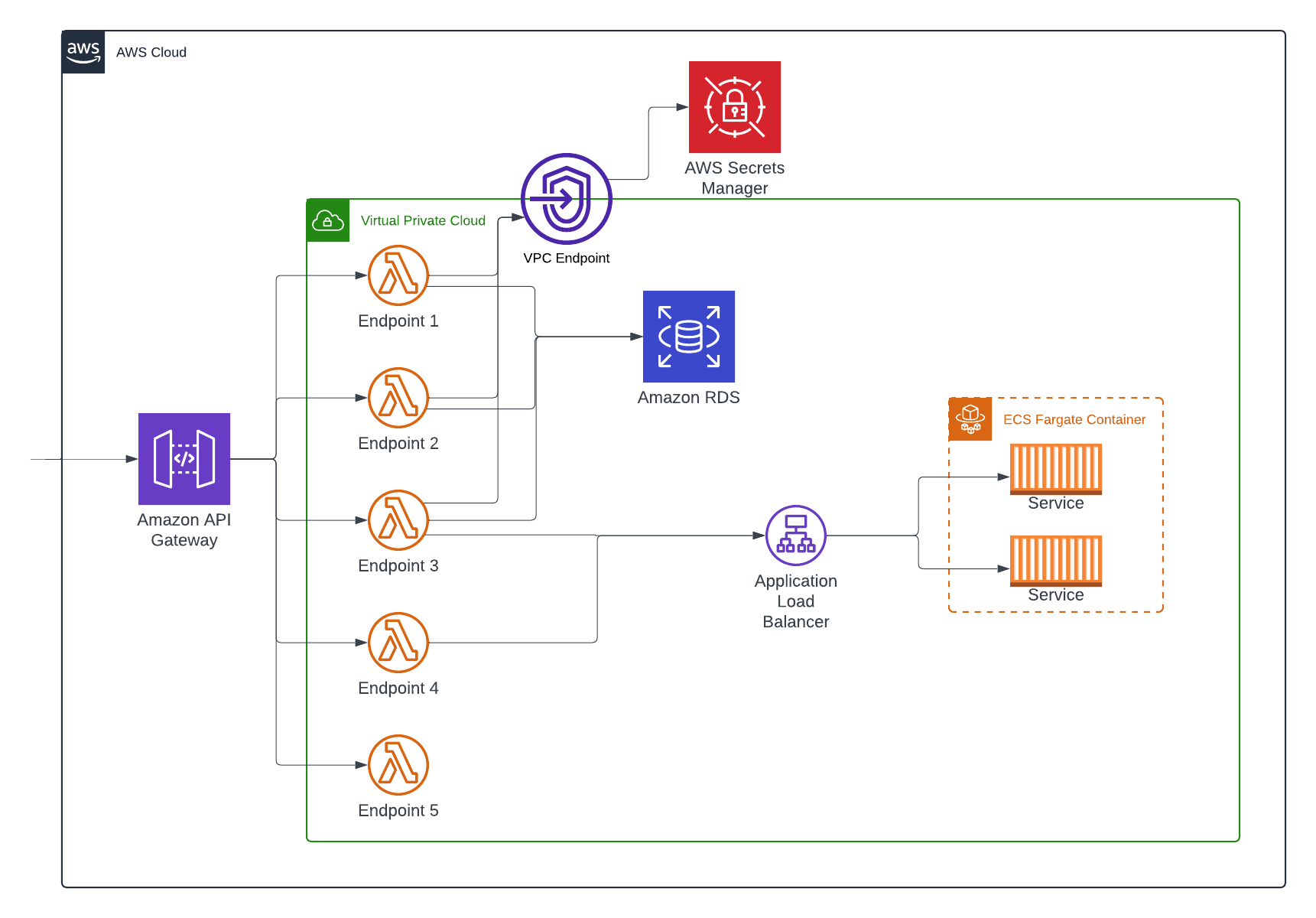

Let's go ahead and fix the network path for Secrets Manager, though: we'll route it through a VPC Endpoint instead of the Internet Gateway. In this case it's an interface endpoint, which we can assign a Security Group to, so we can finally remove that pesky 0.0.0.0/0 rule.

Great! Now that that is in place, we can adjust our Security Group rules. First, we go to the Security Group of every resource that needs to talk to Secrets Manager and update it to allow egress over 443 to the endpoint's Security Group. Likewise, we update the endpoint's Security Group to allow ingress over 443 from each of those Security Groups.

And so we expose the downside of using a Security Group for every resource: overhead.
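The overhead is easy to quantify. This short Python sketch (hypothetical group IDs and helper name) lists the rules that have to be added when each per-resource group needs to reach the endpoint: one egress rule per consumer plus one matching ingress rule on the endpoint's group, so the work grows as 2 × N.

```python
def endpoint_rule_changes(consumer_groups, endpoint_group, port=443):
    """Rules to add so every consumer group can reach an interface endpoint.

    Each consumer needs its own egress rule, and the endpoint's group needs a
    matching ingress rule per consumer.
    """
    changes = []
    for group in consumer_groups:
        changes.append((group, "egress", port, endpoint_group))
        changes.append((endpoint_group, "ingress", port, group))
    return changes


# Hypothetical IDs: the three endpoint Lambdas that read database credentials.
consumers = ["sg-lambda-db-1", "sg-lambda-db-2", "sg-lambda-both"]
changes = endpoint_rule_changes(consumers, "sg-secrets-endpoint")

for change in changes:
    print(change)

touched = {group for group, *_ in changes}
print(f"{len(changes)} rule changes across {len(touched)} Security Groups")
```

This doesn't even count removing the old 0.0.0.0/0 egress rules, and every new Lambda that needs Secrets Manager adds two more rules in two different groups.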

Suppose we try to alleviate that a little by relaxing our policy and updating the endpoint's Security Group to allow ingress from anything in our VPC's CIDR. That certainly helps, but now we can hide an issue. Maybe we missed updating the Security Group for one of the Lambdas. Everything would still function just fine, since the rule we forgot to replace is very open. But our configuration no longer matches what we think it is (aside: this is also what things like Config rules are for). If we change our endpoint Security Group in the future, we may inadvertently catch our mistake, potentially by way of a production outage. It's a contrived example, but problems like this happen more often than we expect, and they're what has us troubleshooting at 2 AM on a Saturday so we don't break our SLAs.
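Catching this kind of drift comes down to comparing the rules we intended with the rules actually deployed. Here is a tiny Python sketch of that comparison, in the spirit of what a Config rule automates. It is not an AWS API: the `intended`/`actual` data, the group IDs, and `find_drift` are all hypothetical, trimmed to two Lambda groups.

```python
# Each group's egress rules as a set of (port, peer) pairs. All IDs are hypothetical.
intended = {
    "sg-lambda-db-1": {(5432, "sg-rds"), (443, "sg-secrets-endpoint")},
    "sg-lambda-db-2": {(5432, "sg-rds"), (443, "sg-secrets-endpoint")},
}

# What is actually deployed: the update to sg-lambda-db-2 was missed. Its old
# 0.0.0.0/0 rule still reaches the endpoint, and the endpoint's VPC-CIDR ingress
# rule lets that traffic in, so nothing breaks and nobody notices.
actual = {
    "sg-lambda-db-1": {(5432, "sg-rds"), (443, "sg-secrets-endpoint")},
    "sg-lambda-db-2": {(5432, "sg-rds"), (443, "0.0.0.0/0")},
}


def find_drift(intended, actual):
    """Report rules missing from, or extra in, each group's deployed egress rules."""
    drift = {}
    for group_id, expected in intended.items():
        deployed = actual.get(group_id, set())
        missing, extra = expected - deployed, deployed - expected
        if missing or extra:
            drift[group_id] = {"missing": missing, "extra": extra}
    return drift


for group_id, diff in find_drift(intended, actual).items():
    print(group_id, diff)
```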

The Alternative

We know the recommended solution sits somewhere in the middle of these two extremes. We want the granularity of individual Security Groups without duplicating rules everywhere, keeping operational overhead low while still following the principle of least privilege.

What we're looking for is composition. The AWS Documentation alludes to this as well:

> Create the minimum number of security groups that you need, to decrease the risk of error. Use each security group to manage access to resources that have similar functions and security requirements.

We define each Security Group to encompass an access pattern, then compose those Security Groups on a resource based on the access patterns it requires. So in this case, what are our access patterns?

- Access the database using credentials from Secrets Manager

- Access the service behind the ALB

- Access no services

Keep in mind that we'll still have Security Groups dedicated to one resource. Take the ALB, for example: there aren't any access patterns that would need to be shared there, so it would have its own Security Group and be the only resource to ever use it.
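Here is the composed layout expressed in the same simplified reachability model: one hypothetical group per access pattern, attached in combination to the Lambdas, and dedicated groups for the database, the Secrets Manager endpoint, and the ALB that reference the pattern groups rather than individual Lambdas. All IDs and resource names are assumptions for illustration.

```python
from typing import NamedTuple

ANYWHERE = "0.0.0.0/0"


class SecurityGroup(NamedTuple):
    group_id: str
    ingress: tuple = ()  # (port, peer) pairs; peer is a group ID or ANYWHERE
    egress: tuple = ()


def rule_matches(rules, port, peer_group_ids):
    return any(p == port and (peer == ANYWHERE or peer in peer_group_ids) for p, peer in rules)


def can_connect(src_groups, dst_groups, port):
    """A new connection needs egress on the source AND ingress on the destination."""
    src_ids = {g.group_id for g in src_groups}
    dst_ids = {g.group_id for g in dst_groups}
    egress_ok = any(rule_matches(g.egress, port, dst_ids) for g in src_groups)
    ingress_ok = any(rule_matches(g.ingress, port, src_ids) for g in dst_groups)
    return egress_ok and ingress_ok


# Access-pattern groups, shared by every resource that needs the pattern.
db_access = SecurityGroup("sg-db-access", egress=((5432, "sg-rds"), (443, "sg-secrets-endpoint")))
ecs_access = SecurityGroup("sg-ecs-access", egress=((443, "sg-alb"),))
# No rules. A newly created Security Group starts with an allow-all outbound
# rule, which would have to be removed for this to hold.
no_access = SecurityGroup("sg-no-access")

# Dedicated groups: each used by exactly one resource, referencing patterns.
rds = SecurityGroup("sg-rds", ingress=((5432, "sg-db-access"),))
secrets_endpoint = SecurityGroup("sg-secrets-endpoint", ingress=((443, "sg-db-access"),))
alb = SecurityGroup("sg-alb", ingress=((443, "sg-ecs-access"),), egress=((443, "sg-ecs"),))

attached = {
    "lambda-db-1": [db_access],
    "lambda-db-2": [db_access],
    "lambda-ecs": [ecs_access],
    "lambda-both": [db_access, ecs_access],  # composition covers the overlap
    "lambda-none": [no_access],
}
targets = {
    "rds": ([rds], 5432),
    "secrets-endpoint": ([secrets_endpoint], 443),
    "alb": ([alb], 443),
}


def reachable(name):
    """Sorted list of targets the named Lambda can open connections to."""
    return sorted(t for t, (dst, port) in targets.items() if can_connect(attached[name], dst, port))


for name in attached:
    print(name, "->", reachable(name))
```

Adding a sixth endpoint that needs the database now means attaching `sg-db-access` to it; neither `sg-rds` nor `sg-secrets-endpoint` has to change.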

I frequently see this concept applied with IAM Roles; only the way the permissions get composed is slightly different. Many people are familiar with having a Role per resource and attaching managed IAM Policies to that Role, but doing a similar thing with Security Groups is something I don't see as often.